Today we discuss one of the Anypoint Connectors: Anypoint Connector for Apache Kafka, which lets you interact with the Apache Kafka messaging system and integrate your Mule applications with an Apache Kafka cluster using Mule runtime engine (Mule). This tutorial assumes you are familiar with Mulesoft, Anypoint Connectors, and Anypoint Studio Essentials.

1. Introduction

Integration platforms offer a wide range of out-of-the-box connectors that let businesses connect to their applications and data sources. Connectors bring the entire business ecosystem together and solve complex integration use cases by abstracting the technical details of connecting to a target system.

An enterprise generally needs connectors either to send and receive data from applications and data sources, or to connect to a specific application using a specific protocol and data format. Most integration platform providers offer out-of-the-box connectors, readily available through their hub or repository.

Mulesoft provides Anypoint Connectors, which are reusable extensions to the Mule runtime engine that enable you to integrate a Mule app with third-party APIs, databases, and standard integration protocols.

1.1 Associated Use Cases

- Log aggregation – Leverage the low-latency processing from Kafka to collect logs from multiple services and make them available in standard format to multiple consumers.

- Analysis and metrics – Optimize your advertising budget by integrating Kafka and your big data analytics solution to analyze end user activity such as page views, clicks, shares, and so on, to serve relevant ads.

- Notifications and alerts – Notify customers about various financial events, ranging from the amount of a recent transaction to more complex events such as future investment suggestions based on integrations with credit agencies, location, and so on.

2. Components we require to configure Apache Kafka with Mulesoft

The following components are needed to configure Apache Kafka with Mulesoft.

- Apache Kafka

Apache Kafka is a distributed messaging system that provides fast, highly scalable messaging through a publish-subscribe model. It is highly available, resilient to node failures, and supports automatic recovery. Kafka topics can handle a high volume of data and carry messages from one endpoint to another. Apache Kafka is suitable for both offline and online message consumption.

Apache Kafka is built on top of the Apache ZooKeeper™ synchronization service. All Kafka messages are organized into topics.

- Mulesoft Apache Kafka Connector

Anypoint Connector for Apache Kafka (Apache Kafka Connector) enables you to interact with the Apache Kafka messaging system and achieve seamless integration between your Mule app and a Kafka cluster, using Mule runtime engine (Mule). It addresses the needs of Mulesoft customers who want a user-friendly way to integrate with Apache Kafka: creating producers and consumers, and performing send-message and receive-message operations.

- Mule Design-time: Anypoint Studio

Anypoint Studio is Mulesoft’s design-time integrated development environment (IDE). It allows organizations to create and orchestrate services, and to capture and publish events from internal or external applications and technologies. Developers can rapidly develop and graphically test integration processes in a no-code, standards-based IDE. These processes are then deployed in a reliable, highly available, and scalable architecture to support mission-critical applications.

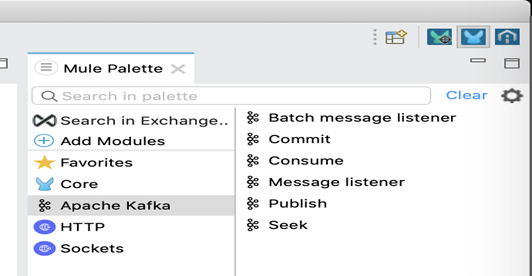

- Apache Kafka palette

The Apache Kafka palette can be used to create producers and consumers and to perform send-message and receive-message operations. The plug-in provides the following main functions:

- Publish, Consume

- Message Listener

- Batch Message Listener

- Commit and Seek

2.1 Required versions

- Java [Ver. 1.8]

- Mule Design-time: Anypoint Studio [Ver. 7.8.0]

- Mule Run-time [Ver. 4.3.0 EE]

- Mulesoft Connector for Apache Kafka [Ver. 4.4.x]

- Apache Kafka [Ver. 2.7.0]

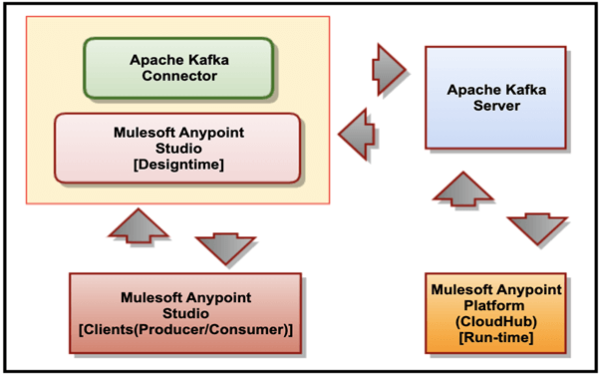
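Before installing the other components, it can help to confirm that the local Java installation matches the required version. The following is a minimal sketch, assuming a Bash shell; the helper name `java_major` is hypothetical and only parses a version string such as `1.8.0_281` (legacy numbering) or `11.0.2`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the major Java version from a version string.
# "1.8.0_281" -> 8 (legacy 1.x numbering), "11.0.2" -> 11
java_major() {
  local v="$1"
  case "$v" in
    1.*) v="${v#1.}" ;;   # drop the legacy "1." prefix
  esac
  echo "${v%%[._-]*}"     # keep everything before the first '.', '_' or '-'
}

if command -v java >/dev/null 2>&1; then
  # java -version writes to stderr; the version is the quoted string
  raw="$(java -version 2>&1 | grep -m 1 'version' | sed -E 's/.*"([^"]+)".*/\1/')"
  echo "Java major version: $(java_major "$raw")"
else
  echo "java not found on PATH" >&2
fi
```

A reported major version of 8 corresponds to the Java 1.8 listed above.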

3. Overall Architecture

The following figure describes the relationship between the Apache Kafka Server, Mulesoft Connector for Apache Kafka, Mulesoft Anypoint Studio and CloudHub.

The following list describes the relationship between different products:

- Mulesoft Connector for Apache Kafka plugs into Mulesoft Anypoint Studio and connects to a Kafka server instance.

- Mulesoft Anypoint Studio is the graphical user interface (GUI) used with the plug-in to design business processes; its embedded Mule runtime is the process engine used to execute them.

- Mulesoft Anypoint Platform (CloudHub) provides a centralized administrative interface to manage and monitor the plug-in applications deployed in an enterprise. (This component is out of scope for this tutorial.)

4. Apache Kafka Connector Installation and Configuration

In Anypoint Studio, add Anypoint Connector for Apache Kafka (Apache Kafka Connector) to a Mule project, configure the connection to the Kafka cluster, and configure an input source for the connector.

Prerequisites

Before creating an app, you must:

- Have access to the Apache Kafka target resource and Anypoint Platform

- Understand how to create a Mule app using Anypoint Studio

- Have access to Apache Kafka to get values for the fields that appear in Studio

4.1 How to add the Connector in Studio Using Exchange

Here are the steps to follow in order to add the connector.

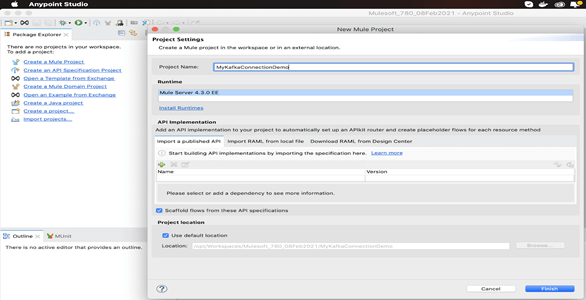

1. In Studio, create a Mule Project.

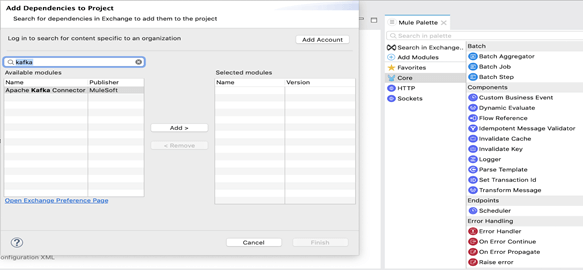

2. In the Mule Palette, click ‘Search in Exchange’. Type the name of the connector, ‘kafka’, in the search field and press ‘Enter’.

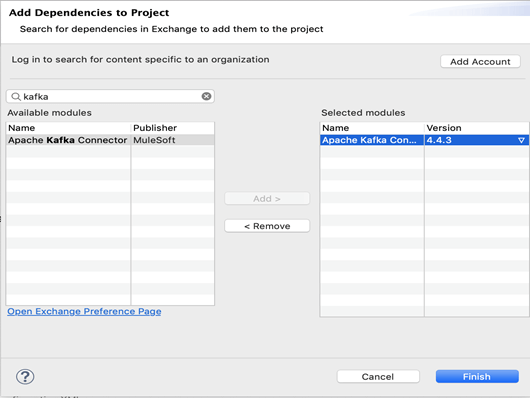

3. Click the connector name in ‘Available modules’.

4. Click ‘Add’ to move it to ‘Selected modules’, then click ‘Finish’.

5. The Apache Kafka module and its activities now appear in the Mule Palette.

6. We can now proceed to create the process flows.

5. Process Flow to Publish and Consume a Message

Here, we will develop two process flows in Anypoint Studio: a Message Publisher flow that publishes a message to a Kafka topic, and a Subscriber flow that receives the published message from the same topic.

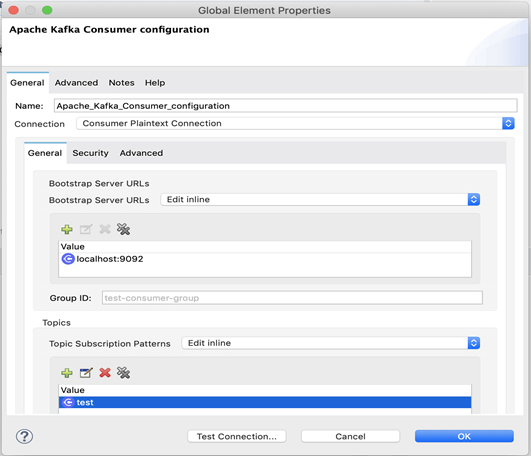

5.1 Consumer shared resource configuration

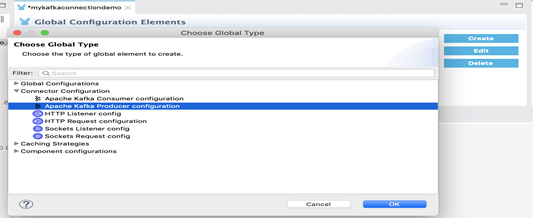

1. Create a consumer configuration global element.

2. You can create a consumer configuration global element to reference from Apache Kafka Connector. This enables you to apply configuration details to multiple local elements in the flow.

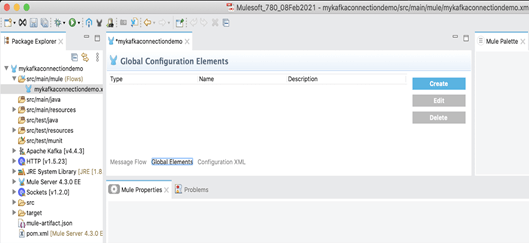

3. On the Studio canvas, click Global Elements.

4. Click Create and expand Connector Configuration.

5. Select Apache Kafka Consumer configuration and click OK.

6. In the Connection field, select a connection type.

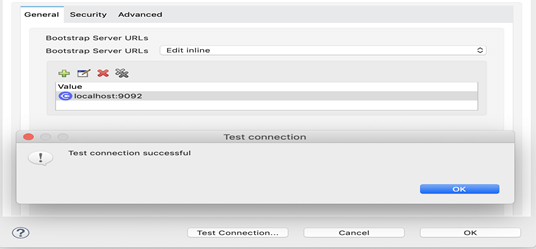

7. Make sure ZooKeeper and the Kafka broker are running.

8. To confirm the connection to the Kafka server, click the ‘Test Connection’ button.

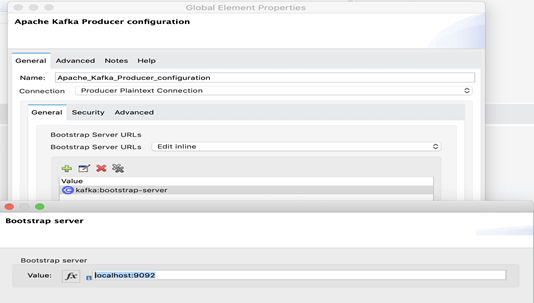

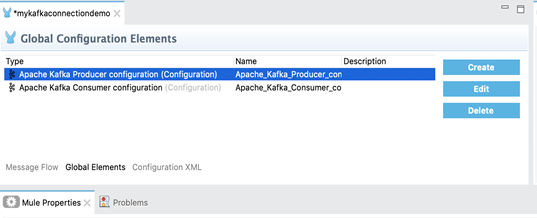
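Step 7 above assumes a local Kafka instance is already up. The following is a minimal sketch of starting a single-node ZooKeeper and broker and creating the ‘test’ topic used later in this tutorial. It assumes Kafka 2.7.0 is unpacked at the hypothetical path `$HOME/kafka_2.13-2.7.0` and listens on the default broker port 9092; the variable and function names are illustrative and should be adjusted to your installation.

```shell
#!/usr/bin/env bash
# Assumption: KAFKA_HOME points at an unpacked Kafka 2.7.0 distribution.
KAFKA_HOME="${KAFKA_HOME:-$HOME/kafka_2.13-2.7.0}"
BOOTSTRAP="${BOOTSTRAP:-localhost:9092}"
TOPIC="${TOPIC:-test}"

start_kafka() {
  # ZooKeeper must be up before the broker; -daemon runs each in the background.
  "$KAFKA_HOME/bin/zookeeper-server-start.sh" -daemon "$KAFKA_HOME/config/zookeeper.properties"
  sleep 5
  "$KAFKA_HOME/bin/kafka-server-start.sh" -daemon "$KAFKA_HOME/config/server.properties"
}

create_topic() {
  # Single partition and replication factor 1 are enough for a local test.
  "$KAFKA_HOME/bin/kafka-topics.sh" --create --if-not-exists \
    --topic "$TOPIC" --partitions 1 --replication-factor 1 \
    --bootstrap-server "$BOOTSTRAP"
}

if [ -x "$KAFKA_HOME/bin/kafka-server-start.sh" ]; then
  start_kafka
  sleep 10   # give the broker time to start before creating the topic
  create_topic
else
  echo "Kafka not found at $KAFKA_HOME; set KAFKA_HOME and re-run." >&2
fi
```

Once the broker is running, ‘Test Connection’ in Studio should succeed against the same bootstrap server address.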

5.2 Producer shared resource configuration

1. Now we will create a producer configuration global element, as we did for the consumer in the previous step.

2. You can create a producer configuration global element to reference from Apache Kafka Connector. This enables you to apply configuration details to multiple local elements in the flow.

Next, we will create the Producer and Consumer process flows using the respective connector configurations.

5.3 Message Producer flow

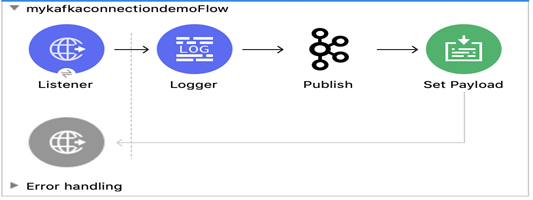

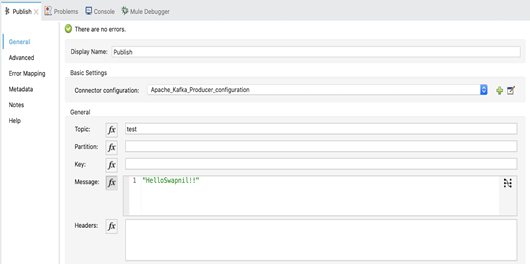

Now we will create a process flow that publishes a message to the Kafka topic using the Kafka Publish activity.

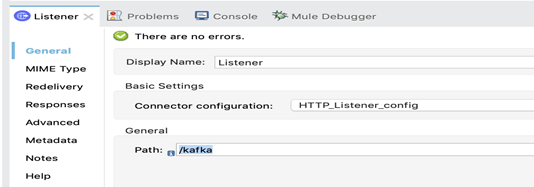

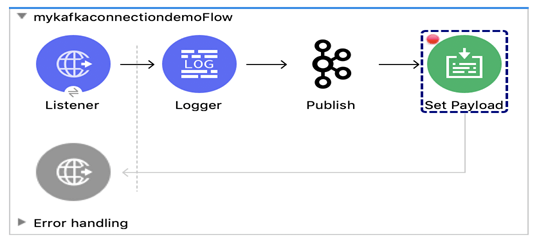

1. Create a simple process flow for the message publisher as below.

2. The HTTP Listener will listen on ‘http://localhost:8081/kafka’.

3. Populate the Topic name and Payload in the Publish activity as below.

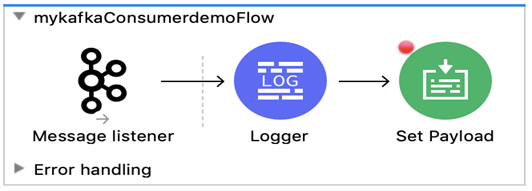

5.4 Message Consumer flow

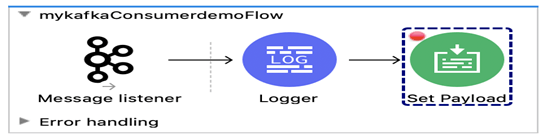

Now we will create a process flow that receives a message from the Kafka topic using the Kafka Listener activity.

1. Create a simple process flow for the message consumer as below.

2. Create a Kafka Consumer Connection with the specified details.

6. Testing to confirm E2E Process Flow

Now we will test the process flows by invoking the Publisher flow, which receives a message from the user and publishes it to the Kafka topic. The Subscriber flow, which is listening on the same topic, then receives the message the user sent.

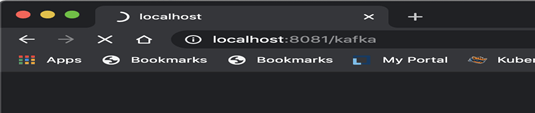

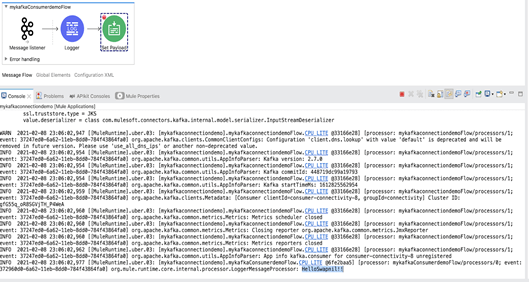

1. Start the process in debug mode and invoke the URL in a browser as shown below.

2. This initiates the Mulesoft Publisher process flow, and the Kafka Publish activity publishes a message to the topic ‘test’.

3. The Kafka Consumer activity consumes the same message, which is eventually logged in the console.

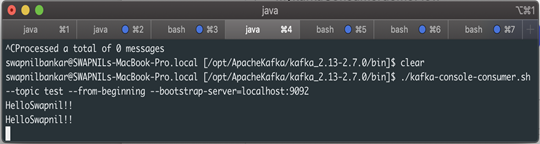

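To verify the published message independently of Mule, run the Kafka console consumer from the Kafka bin directory:

./kafka-console-consumer.sh --topic test --from-beginning --bootstrap-server=localhost:9092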

4. At Consumer Process flow, you will find the same message subscribed on topic, ‘test’.

5. Logger activity will log the same message in the console as shown below.
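The browser request in step 1 can also be issued from a terminal. The following is a minimal sketch that sends a plain GET to the HTTP Listener URL from section 5.3, mirroring what the browser does; the function name `invoke_publisher` is hypothetical, and what the flow publishes depends on how the Payload field of the Publish activity was populated.

```shell
#!/usr/bin/env bash
# URL of the HTTP Listener configured in the publisher flow.
PUBLISH_URL="${PUBLISH_URL:-http://localhost:8081/kafka}"

invoke_publisher() {
  # -sS: quiet but show errors; -f: fail on HTTP error status;
  # --max-time: give up if the Mule app does not answer within 5 seconds.
  curl -sS -f --max-time 5 "$PUBLISH_URL"
}

invoke_publisher || echo "Request failed: is the Mule app running at $PUBLISH_URL?" >&2
```

If the request succeeds, the message should appear both in the Mule console (through the Logger activity) and in the console consumer output.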

7. Conclusions of connection with Mulesoft

We set up Apache Kafka and the Kafka Connector with Mulesoft, and started a consumer client listening on a given topic in Apache Kafka. We then created two process flows: a Message Publisher that publishes a message to the topic ‘test’ and a Message Listener that listens on the same topic. Finally, we received the message at the Kafka consumer that was listening on the topic ‘test’.