There is growing momentum towards “Event-Driven” integration architecture, but exactly how new is this? Isn’t this just a Pub/Sub pattern? And where does it fit alongside Event Streaming, Microservices, Machine Learning, Robotic Processes and other modern paradigms?

At Chakray, we are experts in all things integration, from traditional data transfer to service-oriented architectures and the ESB, through to more modern microservices approaches.

In this article we’ll explore what Event-Driven Architecture really means in the world of integration and help you understand if this approach delivers on the hype and could be right for you.

1. Event-Driven Architecture Description and Scenarios

1.1 What is Event-Driven Architecture?

Event-Driven Architecture is a software architecture pattern in which components communicate by producing and reacting to events. It is characterised by being distributed, asynchronous and highly scalable.

1.2 Event-Driven Architecture Scenarios

Let’s take a look at a real world scenario where event-driven architecture can be put into use:

“Your mobile phone has been stolen”

A typical scenario like this could be represented as a sequence of events:

Report Phone Missing –> Receive Crime Number –> Tell Insurance Company –> Order New Phone –> Transfer Numbers …

This is a simple view because of course there are many other steps such as notifying friends and family, changing social media passwords, replacing sim cards and more.

Let’s take another view of the scenario, inspired by an event driven approach:

Publish Missing Phone –> Shouts Message

- Police listen

- Insurance Company listens

- Family listen

- Friends listen

- Contract provider listens

This is a simplification, but the fundamental concept is clear: an event happens, and interested parties listen for it and respond appropriately. This emphasises the “Driven” part of the Event-Driven paradigm, which is the key differentiator from other patterns.

The Architecture part of the paradigm refers to the fact that conceptually all moving parts of the scenario have been designed in such a way as to support the “Event” or the “Event Stream” as the focus of the scenario.
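The “publish and listen” view of the stolen phone scenario can be sketched in a few lines of Python. This is a minimal in-memory illustration, not a production design: the `EventBus` class, the `phone.missing` event name and the listener reactions are all hypothetical names chosen for this example.

```python
from collections import defaultdict
from typing import Callable


class EventBus:
    """Minimal in-memory publish/subscribe bus (illustrative only)."""

    def __init__(self) -> None:
        # Maps an event name to the handlers that listen for it.
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, event_name: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[event_name].append(handler)

    def publish(self, event_name: str, payload: dict) -> None:
        # The publisher does not know who is listening; it only announces the event.
        for handler in self._subscribers.get(event_name, []):
            handler(payload)


reactions: list[str] = []
bus = EventBus()

# Each interested party decides for itself how to react (hypothetical reactions).
bus.subscribe("phone.missing", lambda e: reactions.append(f"Police: issue crime number to {e['owner']}"))
bus.subscribe("phone.missing", lambda e: reactions.append(f"Insurer: open claim for {e['owner']}"))
bus.subscribe("phone.missing", lambda e: reactions.append(f"Provider: block SIM for {e['owner']}"))

# One event is published; every listener responds independently.
bus.publish("phone.missing", {"owner": "Alex"})

for line in reactions:
    print(line)
```

Note that adding a new listener, such as family or friends, needs no change to the publisher, which is the loose coupling the scenario describes.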

2. History of Event-Driven Architectures

Haven’t we already been doing this for years?

Well, the answer is yes. From an integration perspective, this is commonly known as publish/subscribe: the “Event” is published, and subscribers listen and react.

Several things, however, have increased the need for an event-driven approach in recent years.

- Larger amounts of data

- Prominence of distributed applications and services

- Dynamic scaling of systems and applications

- Expectations of instantaneous responses and actions (customer experience)

- Proliferation of Microservices

- Increased need for real time Business intelligence and agility

- Rise of IoT and “Smart” things and devices

This list is not exhaustive, and there is clear cause and effect between the list items.

Arguably, much of this stems from a consumer-driven, service-led civilisation. Consumers largely expect everything to be available on demand, with minimum friction. Nowadays we have taxis when and where we want them, movies and TV on demand, and lights that turn off when we tell Alexa to do so. Instant and convenient have become the norm.

We already have Pub/Sub, so what’s the problem?

Let’s change the perspective of how we view this from looking at problems to looking for opportunities. Event-Driven can be exploited far beyond the simple concept of a publish/subscribe pattern. This is essentially where concepts such as Event Streaming come into play.

Traditionally publish/subscribe is “publish once and then hope the subscribers have heard”. You can leverage retries, queues and other mechanisms to ensure delivery is successful, but the very nature of the pattern means the publisher doesn’t know who is subscribing and whether all critical subscribers received it. In the stolen phone analogy, what happens if the insurance company fails to receive the message?

With an Event Stream, the published events are stored as an ongoing log of the events. This means the entire history of events is maintained and available. If a subscriber is unavailable or temporarily unsubscribed at the moment an event was published, then as soon as the subscriber re-subscribes, the missed event is still available to process.
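The difference can be sketched with a small append-only log that remembers how far each subscriber has read. This is a simplified, in-memory stand-in for an event stream, written under our own assumptions: the `EventLog` class and its `append` and `poll` methods are hypothetical names, not the API of any particular streaming product.

```python
class EventLog:
    """Append-only, in-memory event log (illustrative stand-in for an event stream)."""

    def __init__(self) -> None:
        self._events: list[dict] = []      # full history, never overwritten
        self._offsets: dict[str, int] = {}  # next position to read, per subscriber

    def append(self, event: dict) -> int:
        """Store the event and return its position in the log."""
        self._events.append(event)
        return len(self._events) - 1

    def poll(self, subscriber: str) -> list[dict]:
        """Return every event the subscriber has not yet seen, then advance its offset."""
        start = self._offsets.get(subscriber, 0)
        pending = self._events[start:]
        self._offsets[subscriber] = len(self._events)
        return pending


log = EventLog()

log.append({"type": "phone.missing", "owner": "Alex"})
police_seen = log.poll("police")          # police are online and read the first event

log.append({"type": "phone.missing", "owner": "Sam"})

# The insurer was unavailable until now, yet both events are still waiting for it.
insurer_seen = log.poll("insurer")
insurer_again = log.poll("insurer")       # nothing new since the last poll

print(len(police_seen), len(insurer_seen), len(insurer_again))
```

Because the log keeps the history and each subscriber tracks its own position, a late or recovering subscriber catches up without the publisher having to resend anything.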

3. Event-Driven Architecture in today’s integration landscape

Now that we have some background on Event-Driven Architecture, Publish/Subscribe and Event Streaming, let’s address the remaining points of the article:

- How does Event Driven intersect with other paradigms such as Microservices?

- Is Event Driven Architecture the answer to everything?

- Why wouldn’t I explore Event-Driven?

A common misconception is that an architecture can only follow one integration paradigm or another. For example, you may hear people say “we have a microservices integration architecture” or “we have a monolithic ESB architecture”. Architectures can in fact have many different styles applied to them and still be valid.

We’re not going to explore the connotations of the word architecture in this article, but ultimately an Event-Driven Architecture will co-exist with, and often requires, a complementary Microservices architecture to be effective. A combination of the two can be described as an Event-Driven Microservice Architecture. Similarly, benefits can be realised in a more monolithic architecture combined with an Event-Streaming capability.

Many of the drivers and influencers behind a Microservices Architecture are the same for Event-Driven; both help to overcome the same challenges.

Consider, however, a Microservices Architecture with a traditional, synchronous, request/reply integration approach. The Microservices Architecture enables an independent, atomic service that has an independent lifecycle and, typically, an independent team responsible for it. If that service relied on a request/reply integration flow with another microservice (for no specific reason), then the service would not be loosely coupled and therefore not truly independent. You could not upgrade or evolve the service without concern for the coupled service.

You do not necessarily need to be Event-Driven to overcome this; an alternative asynchronous pattern such as a message queue can work here. It does, however, demonstrate how the characteristics of an Event-Driven architecture and Event Streaming can interplay positively with Microservices.
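As a rough illustration of the decoupling described here, the sketch below uses Python’s standard `queue.Queue` as a stand-in for a message queue between two services. The service names, the `order.placed` event and the function names are hypothetical; in a real deployment the two functions would run in separate processes with a broker between them.

```python
import queue

# Stand-in for a message queue sitting between two independent services.
order_events = queue.Queue()


def place_order(order_id: str) -> str:
    """Order service: records the event and returns without waiting for billing."""
    order_events.put({"type": "order.placed", "order_id": order_id})
    return "accepted"


def run_billing_once(invoices: list[str]) -> None:
    """Billing service: processes whatever is waiting, on its own schedule."""
    while True:
        try:
            event = order_events.get_nowait()
        except queue.Empty:
            break
        invoices.append(f"invoice for {event['order_id']}")


# The order service succeeds even though billing has not run yet.
status = place_order("A1")

# Later, billing catches up independently.
invoices: list[str] = []
run_billing_once(invoices)

print(status, invoices)
```

The order service never calls billing directly, so either side can be upgraded or taken offline briefly without breaking the other.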

3.1 Opportunities for an Event-Driven Architecture

So far the focus of this article has largely been from an integration perspective, in which there is a clear place for Event-Driven, Publish/Subscribe, and Event Streaming, but let’s explore some opportunities involving other modern concepts such as AI, Machine Learning and Robotic Processes.

We explained earlier that Event Streaming maintains a comprehensive store of past events alongside the live events. The stream can be distributed across many locations, and replicated across locations, meaning it can be designed to handle huge volumes of events, and therefore data. Go back to the missing phone analogy, and imagine now a record of every event, past and present, where a person reported a lost or stolen phone.

Think about all the data, what can we learn from it and how can we use it?

- Times of day / week / month / year when loss and theft is most likely.

- Locations where it happens the most?

- What about the common characteristics of people it happens to the most?

Imagine if an insurance provider of mobile phones could access this event stream and combine it with an event stream that records the events of people buying new phones. The data has now become intelligence, which can enable the insurance provider to dynamically adjust prices, promotions and sales efforts in order to maximize revenue.
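To make this concrete, here is a small sketch of the kind of analysis two such streams allow. All of the data is invented for illustration, and the field names (`reported_at`, `bought_at`, `location`) are assumptions rather than a real schema.

```python
from collections import Counter
from datetime import datetime

# Hypothetical events read from a "phone reported missing" stream.
theft_events = [
    {"reported_at": "2024-03-01T23:15:00", "location": "Leeds"},
    {"reported_at": "2024-03-02T23:40:00", "location": "Leeds"},
    {"reported_at": "2024-03-02T08:05:00", "location": "York"},
]

# Hypothetical events read from a "new phone purchased" stream.
purchase_events = [
    {"bought_at": "2024-03-03T10:00:00", "location": "Leeds"},
    {"bought_at": "2024-03-04T12:30:00", "location": "Leeds"},
    {"bought_at": "2024-03-04T09:00:00", "location": "York"},
    {"bought_at": "2024-03-05T16:45:00", "location": "York"},
]

# When does loss or theft happen most often?
thefts_by_hour = Counter(
    datetime.fromisoformat(e["reported_at"]).hour for e in theft_events
)
peak_hour = thefts_by_hour.most_common(1)[0][0]

# Where does it happen, relative to how many phones are sold there?
thefts_by_location = Counter(e["location"] for e in theft_events)
purchases_by_location = Counter(e["location"] for e in purchase_events)
theft_rate = {
    loc: thefts_by_location[loc] / purchases_by_location[loc]
    for loc in purchases_by_location
}

print(peak_hour, theft_rate)
```

A ratio like `theft_rate` is the sort of signal an insurer could feed into pricing or promotions, although a real model would need far more data and care than this toy example.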

This is one example, but imagine the possibilities here when thinking about other scenarios more aligned with your industry.

None of these outcomes are particularly new; projects that leverage and analyse big data have been taking place for years. Event Streaming, however, makes this considerably simpler. It allows organisations with an established data science practice to go further, and lowers the barrier for companies that could not previously justify an investment in this space.

3.2 Pitfalls to avoid when adopting an Event-Driven Architecture

Before we conclude the article, let’s balance the argument and look at when an Event-Driven approach would not be beneficial.

The primary issue with “Event-Driven” is complexity, which often has a direct relationship with cost. Whilst it’s clear to see the benefits, setting up distributed, highly available, real-time event logs is not a trivial undertaking, and requires serious planning.

Here are some common pitfalls when adopting Event-Driven Architectures:

- Capturing too much or ambiguous information

It’s easy to get carried away creating events for everything. Whilst these events “could be useful in the future”, they will certainly create additional complexity and development work. Not everything needs an event, so maintain focus on what “is useful today”. Events should be easy for all teams to understand and should not be difficult to explain.

- Using Events when you shouldn’t

This may seem obvious, but it’s a common mistake to attempt to make everything fit into “Event-Driven”. By design, the architecture is loosely coupled and asynchronous, so in scenarios where a response is needed from a known recipient, in a specific timeframe, is this the best approach? There are many situations where the approach could do more harm than good.

The ROI or Business Value should also be considered when evaluating the design for a flow or scenario. For example, does the total cost of ownership of an Event Stream relating to “Employee Holiday Requests” really deliver on the investment? Maybe in some circumstances it does, but the point is that a pragmatic, value-based approach is always recommended.

- Technology ownership

The event-driven journey often starts with a use case that is not business critical. For instance, it may be implemented to help decouple an application. In this scenario it becomes easy for the technology to be viewed as an application enabler, which pushes ownership towards application development. To achieve the best outcomes, the technology needs solid data design and integration thinking if it is to succeed as a long-term enabler in an organisation.

- Trying to run too quickly

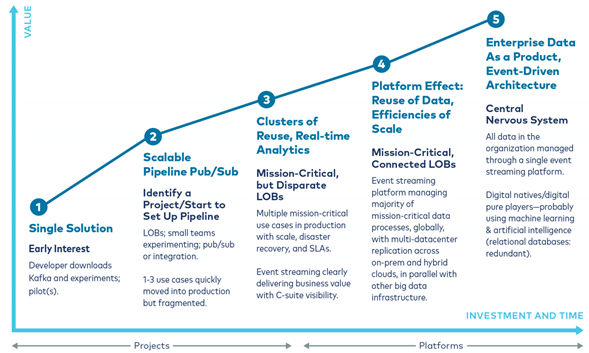

It’s often the case that an organisation may focus on the ultimate goal of “being the most automated intelligent pharmaceutical company in the world” and try to tackle every use case in a big bang approach. This is a recipe for disaster, and will more likely increase the time to desired state. The best implementations start small and grow with time, understanding and experience.

To help with this, Kafka market leaders Confluent™ have provided this adoption curve:

- Impossible expectations

Event-Driven does not and will not solve everything. Dysfunctional teams will still exist if they exist today. Poor automated testing and deployment capability will still exist and will not be solved by adopting this approach. Many opportunities can be realised with an Event-Driven approach but it certainly won’t solve everything.

From a technical perspective, the obvious scenarios of when it may be better to use something like REST or GraphQL as opposed to events are:

- You need a request/reply interface within a specific time frame e.g. an instant credit check

- You need a transaction that is easy to support

- An API needs to be made available to the public

- The project/requirement is low volume

Conclusion

So, to summarise, it’s clear that an “Event-Driven” approach has fantastic benefits that are becoming essential for organisations to survive and thrive in an increasingly competitive, consumer-driven landscape.

It is, however, not to be taken lightly. More important than this particular approach is the overall integration strategy, and the right partnerships to help navigate through the integration jungle and avoid the common pitfalls.

No single style is a panacea; it is a combination of the most appropriate solutions at the right time that will lead to success.